
I am an Associate Principal Robotics Research Engineer at Dyson Technology Ltd., where for the last five years I have led research on robotic manipulation for future domestic robots. Some of our work was publicised here. I completed my PhD in Computer Science at the University of Birmingham in October 2019, working in the Intelligent Robotics Lab on robot learning and dexterous grasping. I have contributed to key research in robot grasping and to patents on autonomous robotic cleaning technologies. I am passionate about mentoring and about advancing robotics and AI to shape the future of intelligent automation.

I have worked on active vision for robotic grasping in the PaCMan project. Before starting my postgraduate studies, I received a Bachelor's degree in Computer Science from the Center for Informatics, Federal University of Pernambuco. I was also a member of VoxarLabs, where I worked on computer vision, augmented reality and virtual reality research and applications.

Google Scholar / GitHub / LinkedIn / CV


I am interested in how closing the loop between perception and action can improve performance in robotic manipulation tasks. My research focuses on robot perception, machine learning and control.


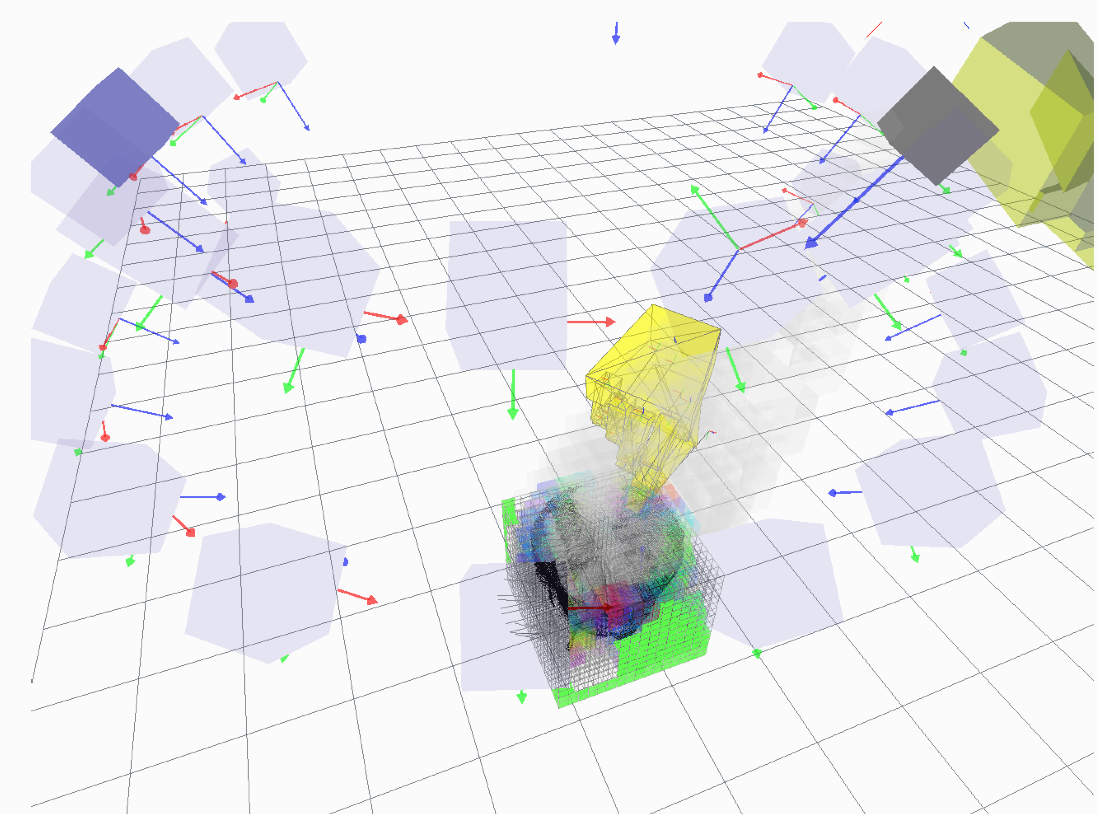

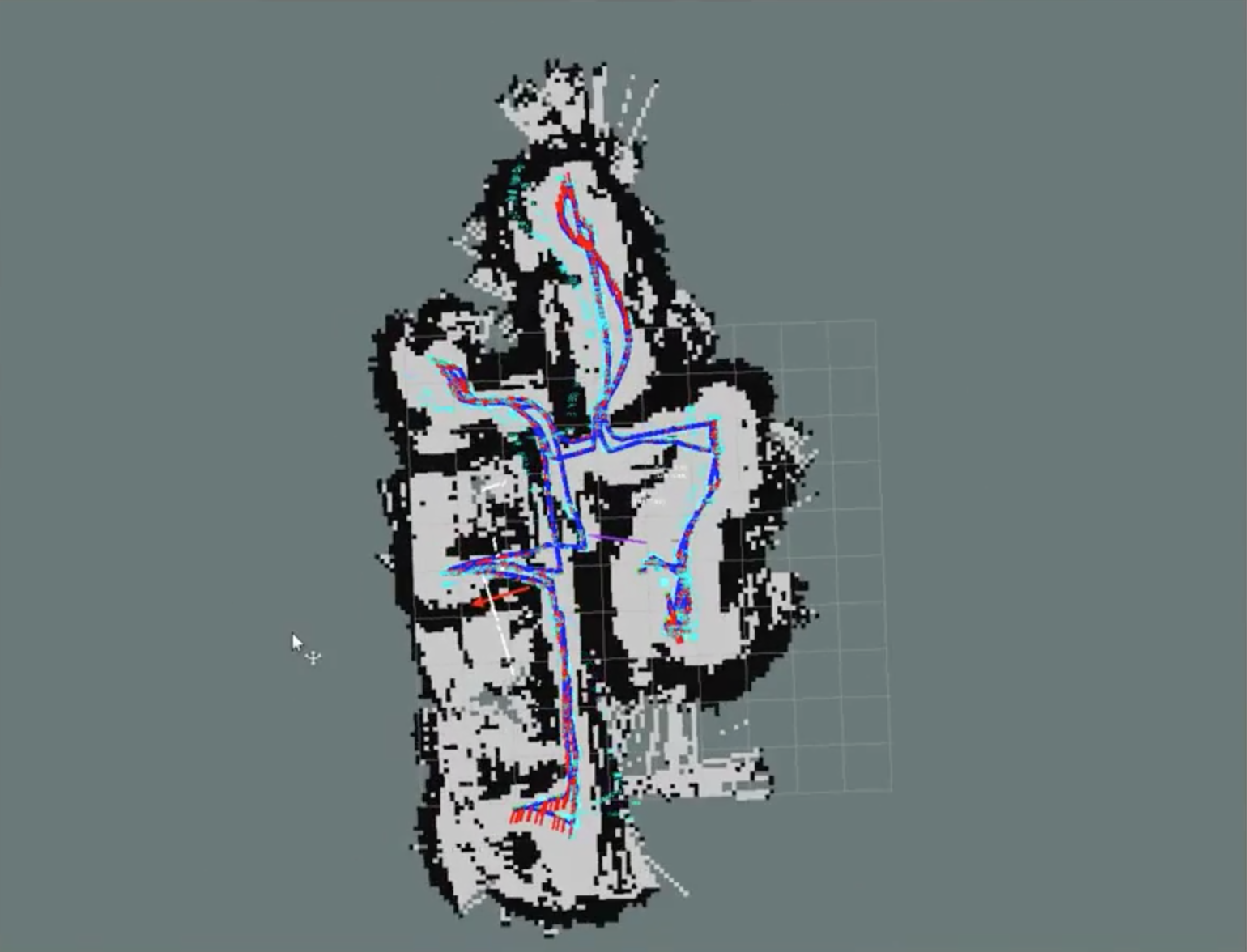

This work introduces a planner that uses an uncertain, learnt forward (dynamics) model to plan robust push manipulation. The forward model of the system is learnt by poking the object in random directions and is then used by a model predictive path integral (MPPI) controller to push the box to a required goal location. Because path integral control scores sampled rollouts of the forward model rather than relying on gradients, the proposed approach is able to avoid regions of high predictive uncertainty in the forward model. As a result, pushing tasks are completed robustly with respect to the estimated uncertainty in the forward model, without requiring differentiable cost functions.
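To make the idea of uncertainty-aware path integral control more concrete, here is a minimal sketch, not the implementation used in this work. Everything in it is an illustrative assumption: the object is reduced to a 2-D point, `forward_model` is a hypothetical hand-coded stand-in for the learnt model (it returns a predicted next position plus a predictive variance that is high inside an arbitrary disc), and the cost simply adds a weighted variance penalty to the squared distance to the goal. The parameter names and values (`num_samples`, `noise_std`, `temperature`, `uncertainty_weight`) are likewise hypothetical. The point it illustrates is that MPPI only needs to *evaluate* rollout costs, so a non-differentiable uncertainty penalty can steer the plan.

```python
import numpy as np

UNCERTAIN_CENTRE = np.array([0.5, 0.5])  # hypothetical poorly explored region
UNCERTAIN_RADIUS = 0.2


def forward_model(state, action):
    """Hypothetical stand-in for a learnt, uncertain forward model.

    Maps a 2-D object position (or a batch of them) and a push action to a
    predicted next position and a scalar predictive variance. The variance is
    set high inside a disc, mimicking a region the poking data never covered.
    """
    mean = state + action
    dist = np.linalg.norm(mean - UNCERTAIN_CENTRE, axis=-1)
    variance = np.where(dist < UNCERTAIN_RADIUS, 1.0, 0.01)
    return mean, variance


def mppi_step(state, nominal, goal, rng, num_samples=256, noise_std=0.05,
              temperature=0.1, uncertainty_weight=5.0):
    """One MPPI update of the nominal control sequence (shape: horizon x 2)."""
    horizon = nominal.shape[0]
    noise = rng.normal(0.0, noise_std, size=(num_samples, horizon, 2))
    controls = nominal[None] + noise
    states = np.repeat(state[None], num_samples, axis=0)
    costs = np.zeros(num_samples)
    for t in range(horizon):
        states, variance = forward_model(states, controls[:, t])
        # Running cost: squared distance to goal plus an uncertainty penalty.
        # Neither term has to be differentiable.
        costs += np.sum((states - goal) ** 2, axis=-1)
        costs += uncertainty_weight * variance
    # Path-integral weights; subtracting the minimum keeps exp() stable.
    weights = np.exp(-(costs - costs.min()) / temperature)
    weights /= weights.sum()
    # The cost-weighted average of the perturbations updates the plan.
    return nominal + np.einsum("k,kti->ti", weights, noise)


def run_demo(steps=50, horizon=10, seed=0):
    """Receding-horizon loop: plan, execute the first action, shift the plan.

    For simplicity the toy forward model also plays the role of the real
    system when the first action is executed.
    """
    rng = np.random.default_rng(seed)
    state, goal = np.array([0.0, 0.0]), np.array([1.0, 1.0])
    nominal = np.zeros((horizon, 2))
    for _ in range(steps):
        nominal = mppi_step(state, nominal, goal, rng)
        state, _ = forward_model(state, nominal[0])
        nominal = np.roll(nominal, -1, axis=0)  # warm-start the next plan
        nominal[-1] = 0.0
    return state, goal
```

Warm-starting each update from the shifted previous plan is a common MPPI design choice; it lets the small sampled perturbations accumulate into a full push trajectory over successive control steps.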


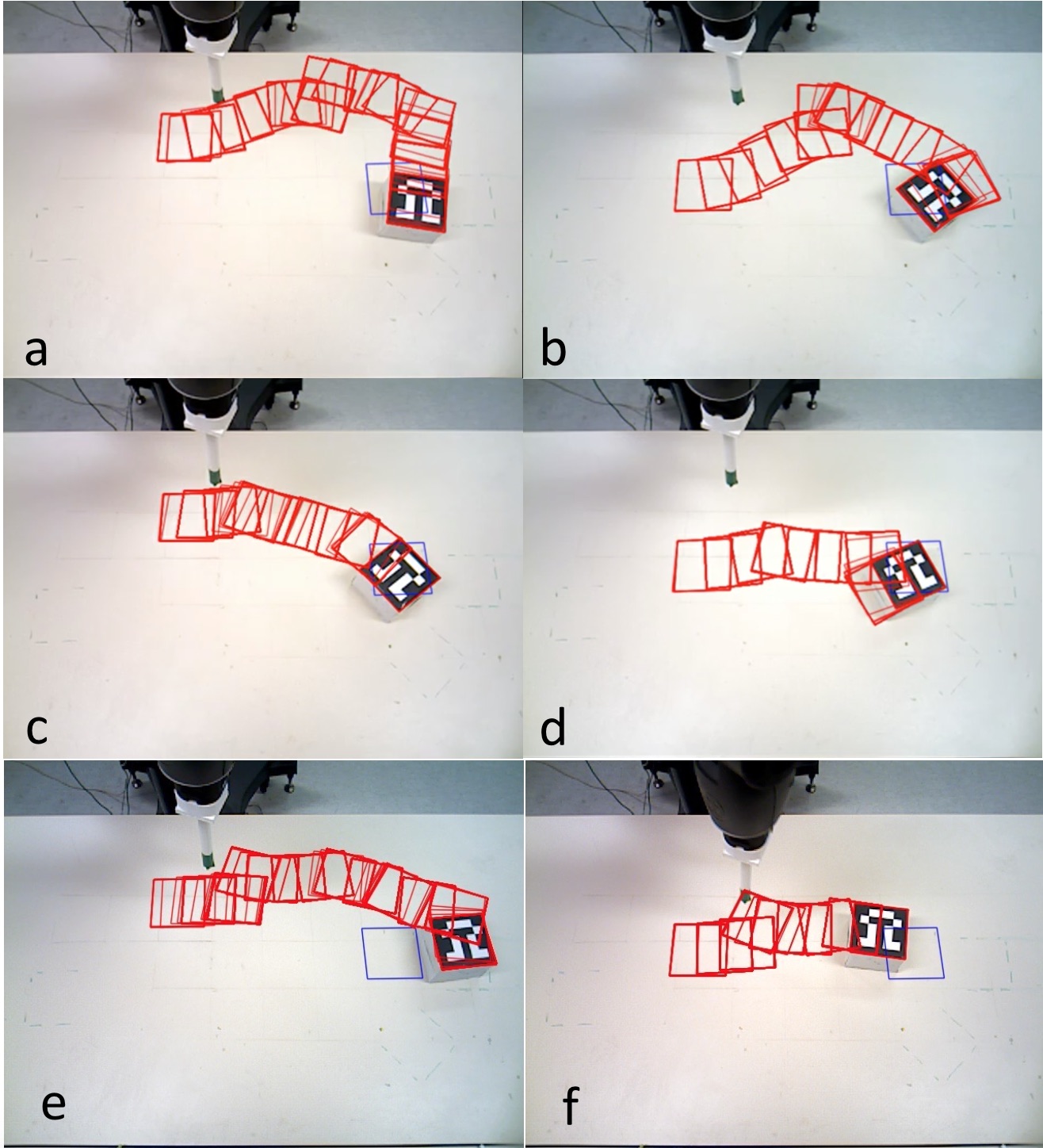

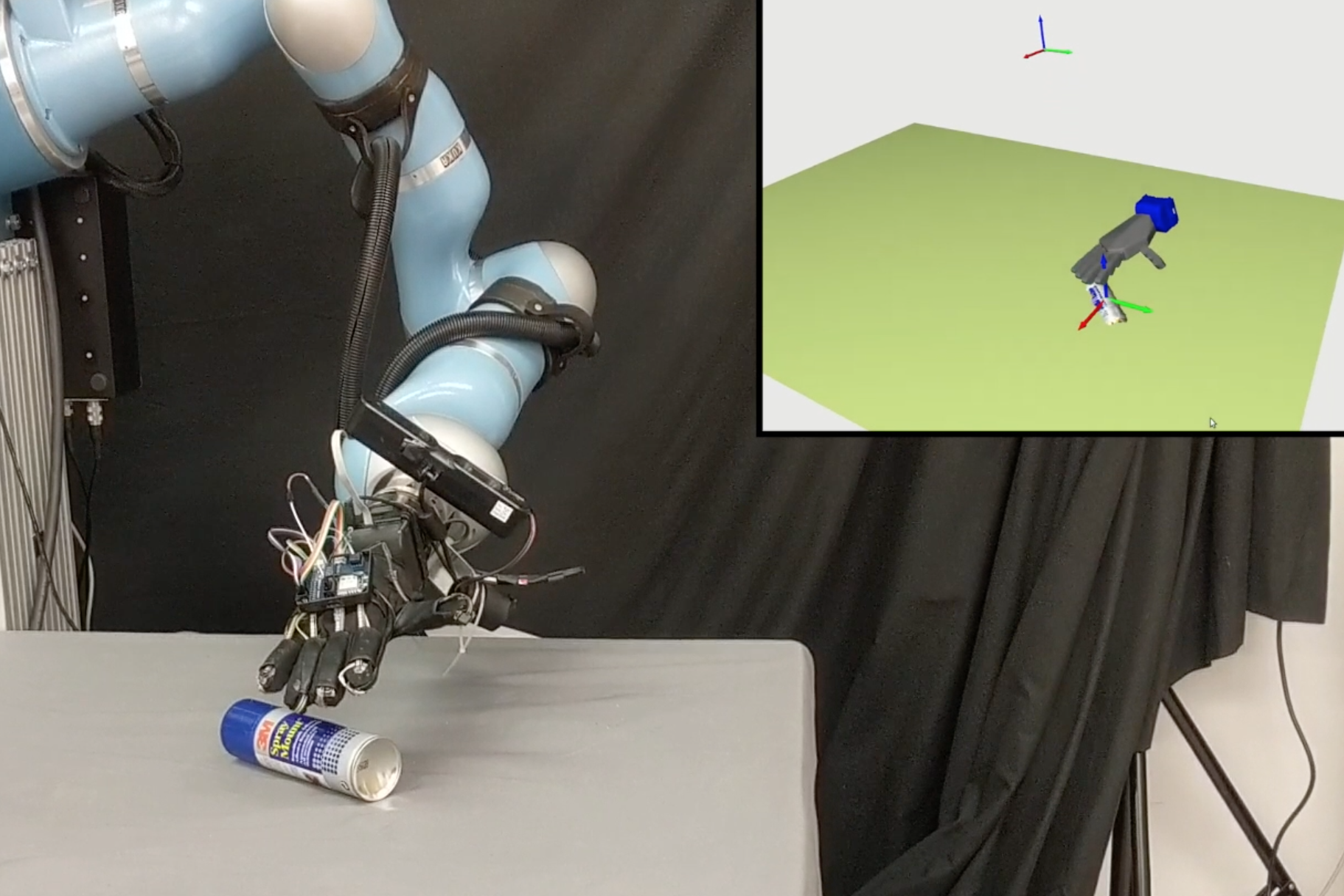

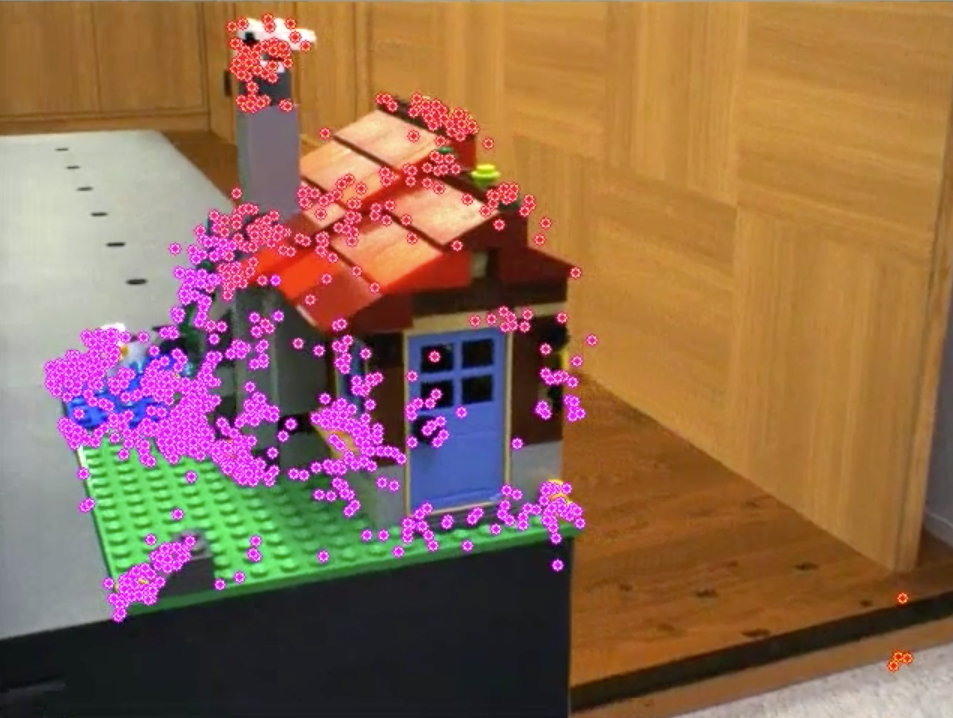

We tackled the problem of improving robot grasp performance using active vision. We sought to increase grasp success with two view selection heuristics: one that lets the robot explore good-quality grasp contact points, and another that lets it investigate its workspace to make sure candidate grasp trajectories do not collide with unseen parts of the object to be grasped. Our results showed that this approach yielded a higher grasp success rate than a random view selection strategy, while using fewer camera views for grasp planning.
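As a rough illustration of how two such heuristics could be combined when choosing the next camera view, the sketch below uses a simple greedy, voxel-counting score. It is a hypothetical simplification, not the method from this work: `CandidateView`, `view_score`, `select_views`, the equal weights, and the assumption that each view's set of visible voxels has already been computed (for example by ray casting into an occupancy map) are all illustrative. One term counts unknown voxels near candidate grasp contacts; the other counts unknown voxels swept by candidate grasp trajectories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateView:
    """Hypothetical candidate camera pose and the voxels it would observe.

    `visible` is assumed to be precomputed (e.g. by ray casting into the
    current occupancy map); computing it is outside this sketch.
    """
    name: str
    visible: frozenset


def view_score(view, unknown, contact_region, trajectory_region,
               contact_weight=1.0, collision_weight=1.0):
    """Score a view by the unknown voxels it would reveal in two regions."""
    revealed = view.visible & unknown
    # Heuristic 1: reveal unseen space around candidate grasp contacts.
    contact_gain = len(revealed & contact_region)
    # Heuristic 2: reveal unseen space swept by candidate grasp trajectories.
    collision_gain = len(revealed & trajectory_region)
    return contact_weight * contact_gain + collision_weight * collision_gain


def select_views(views, unknown, contact_region, trajectory_region, budget):
    """Greedily pick up to `budget` views, stopping early if none helps."""
    unknown = set(unknown)
    remaining = list(views)
    chosen = []
    for _ in range(budget):
        if not remaining:
            break
        best = max(remaining, key=lambda v: view_score(
            v, unknown, contact_region, trajectory_region))
        if view_score(best, unknown, contact_region, trajectory_region) == 0:
            break  # no remaining view reveals anything task-relevant
        chosen.append(best.name)
        unknown -= best.visible  # observed voxels are no longer unknown
        remaining.remove(best)
    return chosen
```

Stopping as soon as no view reveals task-relevant space is one simple way to reflect the goal of planning grasps with fewer camera views; a real system would also re-plan grasp candidates after each observation, which this sketch omits.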
